AI Models & Privacy

Choose between local models (privacy) and frontier models (power)

Why Use Local Models?

✓ Privacy & Security

Your code and conversations never leave your machine

✓ One-Click Management

Download models and start servers with Cue's GUI - no terminal commands

✓ Offline Access

Work without an internet connection once models are downloaded

✓ No API Costs

No usage limits, monthly fees, or per-token charges

Setup Options

Ollama (Recommended)

Easy-to-use desktop application for running AI models locally

Install Ollama:

Then start the Ollama service:

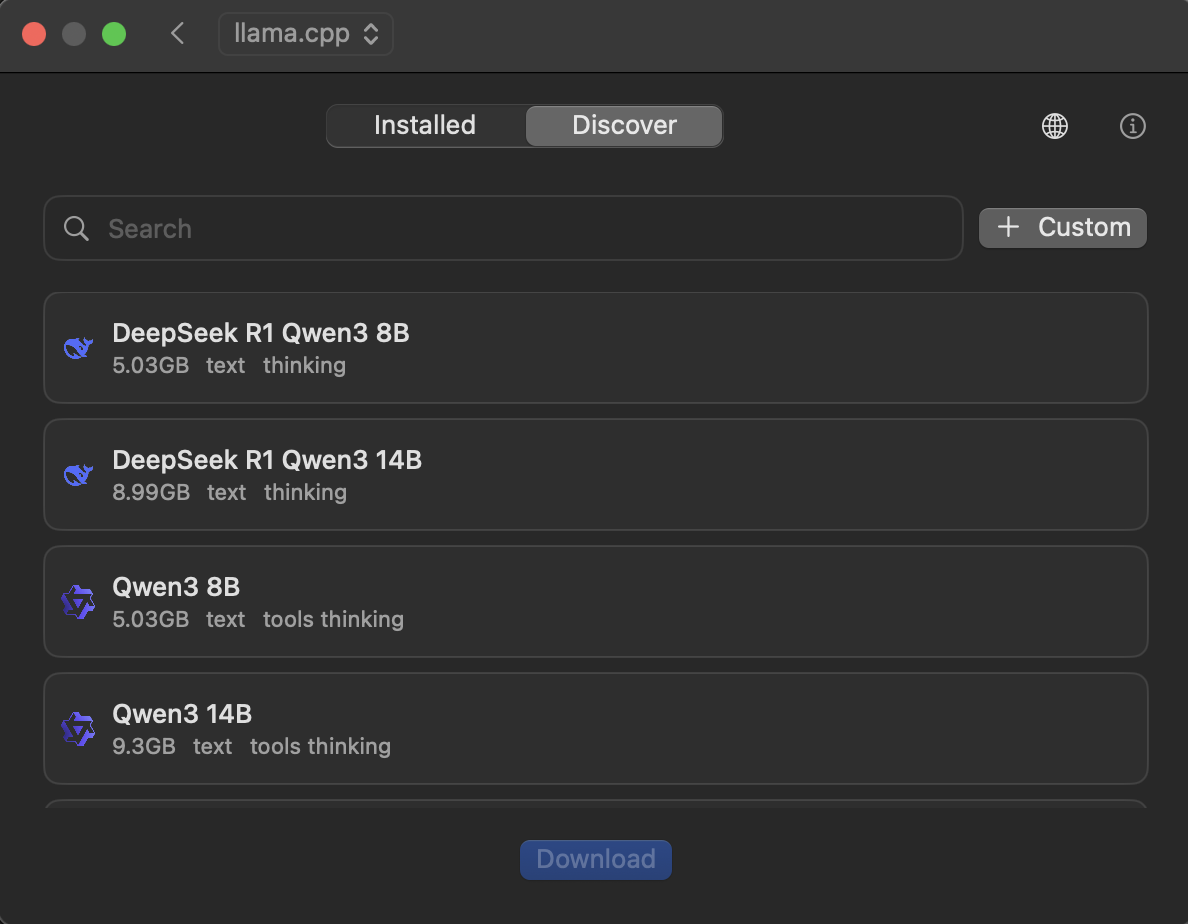

Model Discovery in Cue

Once Ollama is installed, Cue's built-in model discovery feature makes it easy to browse and download models directly from the app.

How it works:

- Open Cue and go to Settings → Providers

- Enable the Ollama provider

- Browse available models in the Model Discovery section

- Select a model and click the Download button

- Start chatting once the download completes!
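To confirm outside of Cue which models have finished downloading, you can ask the local Ollama server directly. The sketch below is a minimal example, assuming Ollama is listening on its default address (`http://localhost:11434`) and using its `/api/tags` endpoint. The function names `parse_model_names` and `list_local_models` are illustrative and not part of Cue.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama server which models are already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        for name in list_local_models():
            print(name)
    except (OSError, ValueError) as exc:
        # OSError covers connection failures; ValueError covers malformed JSON.
        print(f"Could not read the model list from {OLLAMA_URL}: {exc}")
```

If the script reports a connection error, the Ollama service is probably not running yet.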

llama.cpp (Advanced)

High-performance C++ implementation for technical users

Install llama.cpp:

That's it! Cue handles the rest.

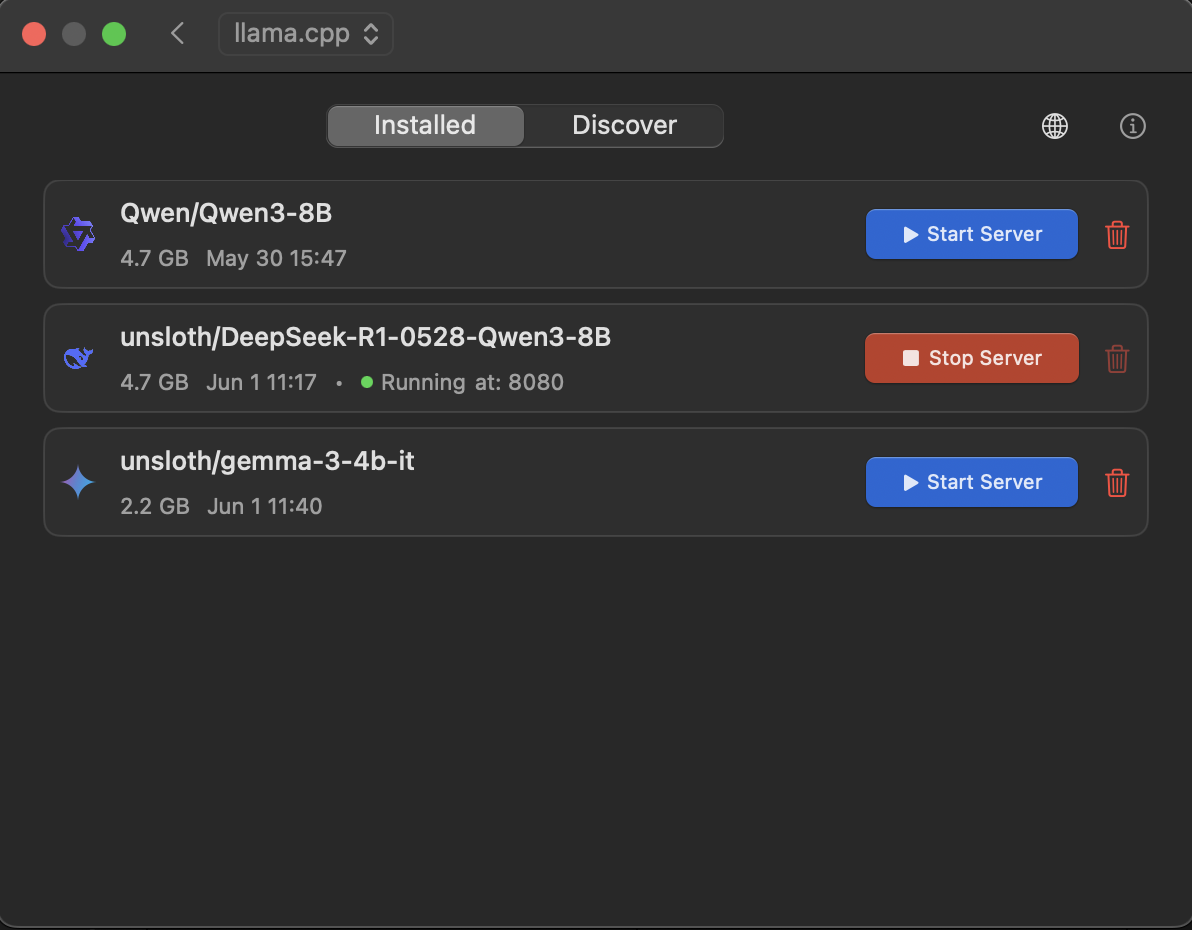

One-Click Model Management

Once llama.cpp is installed, Cue provides a complete GUI for downloading GGUF models and managing servers - no command line needed!

What Cue does for you:

- Browse and download GGUF models from Hugging Face

- Configure server settings (port, context size, Jinja templates)

- Start/stop servers with one click

- Monitor running servers and their status

- Automatically manage model paths and configurations
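For context, the server settings listed above correspond to command-line options of llama.cpp's `llama-server` binary. The sketch below shows roughly what a launcher has to assemble. It is an illustration under stated assumptions, not Cue's actual implementation: `ServerConfig` and `build_server_command` are hypothetical names, and the context size is an example value.

```python
from dataclasses import dataclass


@dataclass
class ServerConfig:
    """Hypothetical container for the settings exposed in the GUI."""
    model_path: str            # path to a downloaded .gguf file
    port: int = 8080           # llama-server listens on 8080 by default
    context_size: int = 4096   # context window in tokens (example value)
    use_jinja: bool = False    # use the model's Jinja chat template


def build_server_command(cfg: ServerConfig) -> list[str]:
    """Translate the settings into a llama-server argument list."""
    cmd = [
        "llama-server",
        "--model", cfg.model_path,
        "--port", str(cfg.port),
        "--ctx-size", str(cfg.context_size),
    ]
    if cfg.use_jinja:
        cmd.append("--jinja")
    return cmd


if __name__ == "__main__":
    # Assumed file name, for illustration only.
    config = ServerConfig("models/example.gguf", port=8081, use_jinja=True)
    print(" ".join(build_server_command(config)))
```

The resulting list could be handed to `subprocess.Popen` to start a server, and the returned process object's `terminate()` method would stop it. Cue's one-click start and stop buttons spare you from doing this by hand.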